Building in Rise 360: Teacher Training Course

This has been a long time coming. Thirteen months in the making!

In some ways, my design process was unusual. Everyone in my cohort at Oregon State University has been meditating on their final mock course, discussing various components in very measured increments with peers who weren’t deeply involved. We were each our own designer, writer, and SME. No institution was involved, so decisions were made independently and with little outside consequence. I imagine that if I were on a team, this process would have been 180 degrees different.

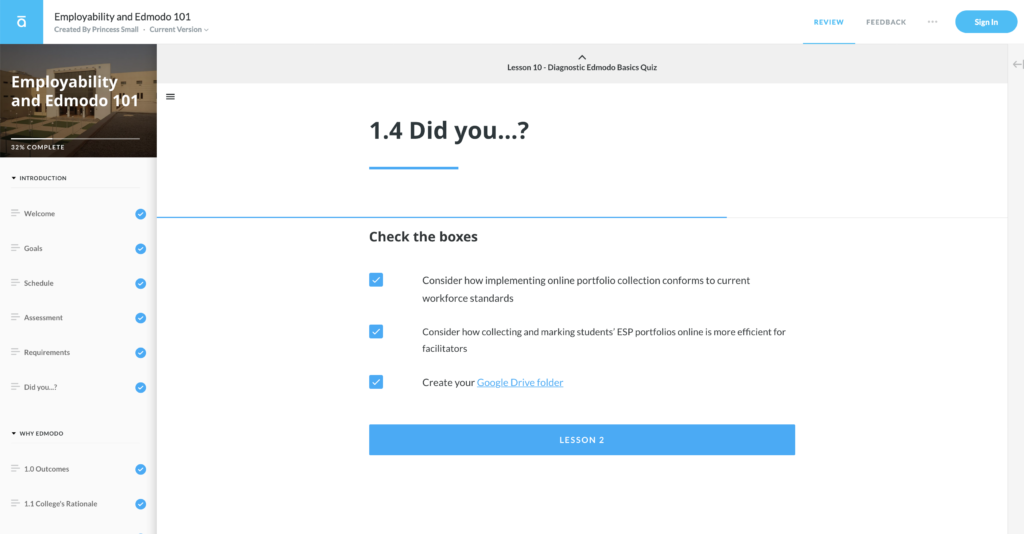

What I ultimately wanted to achieve was a well-balanced, straightforward, reasonably challenging, easy-to-follow, comprehensive short course on how to use an LMS. I believe I achieved that. The initial feedback I’ve gotten has been along those lines. Given my context, I believe I included enough community-building exercises while still allowing for learner autonomy. Flow (scaffolding) is important to me, whether that’s during a grammar lesson, an artistic activity, or a whole curriculum. I wanted students to feel guided and supported, and I think my program does that. At the most basic level, I used color, buttons, and dividers to section off the learning objectives. I think that improved the balance and the sense of achievability.

I used Rise 360 to design and publish the course. It was a new experience using an industry-leading authoring tool rather than an LMS. They each have their benefits. One learning resource I hadn’t originally planned to include, but did, was a news article supporting the mock institution’s rationale for adopting the tool I was training the teachers on. Learners then confirm that they understood this rationale by choosing the targeted skill. I would actually like to have a better confirmation strategy, but in some ways, Rise 360 is limited in that capacity. It relies too heavily on quiz-like student feedback and engagement. Again, this is neither good nor bad in itself; it depends on the course. Still, Rise 360’s lack of constructivist tools is something I hope they fix soon.

That said, I did use a few of its “knowledge check” tools. There is a self-grading pre-quiz and post-quiz. I also placed two or three individual multiple-choice questions (MCQs) throughout the course to keep learners on their toes, or at least keep their eyes open. I have yet to confirm whether I can review learners’ answers. I hope I can, or else Rise 360’s usefulness to instructors, in my opinion, would be severely limited.

This course could meet the needs of the institution and learners with very minor adjustments. I am on the fence as to whether or not to use any sort of numerical assessment strategy because of the perception of nitpicking by management and the additional paperwork involved. A benefit would be the measurability and assurance that the new initiative is working or has been sufficiently deployed. These are the kinds of discussions that would have taken place if our mock courses were not mock and we were being held accountable.

Some specific feedback was that the skill check exercise was a little confusing, so I may want to clarify the process. The revisions I need to make are to include a better rubric for the assessments that are currently scored numerically and to create a better exit survey. The rubric is not nearly as specific and objective as I would prefer. The exit survey is too short, so it doesn’t cover all the aspects of the course I would like to get data on. Also, survey results confirmed that my videos did not have closed captions (CC). Honestly, I may redo the videos, replacing my voice with an automated one. Then I could add the captions by effectively copying and pasting the script. Thank you to one of my cohort members for that idea.
